The TYPO3 core CI uses the sysbox OCI runtime to run unprivileged DinD containers and some helper containers to increase performance.

TYPO3 core testing infrastructure – Part 3

Secured docker-in-docker with sysbox

This post is part of our TYPO3 core testing series

Introduction

Part 2 looked at the systems involved in the testing setup. The true magic, however, is in the docker-related details. Let’s have a look.

runTests.sh

The easiest way to execute core testing on a local developer machine is the script Build/Scripts/runTests.sh: It executes everything using docker images and thus creates a well-defined and reproducible setup isolated from the host machine. Only docker and docker-compose are needed locally.

One major goal for a new automated testing core setup was to do the exact same thing in CI as well: When something fails in CI, developers should be able to easily reproduce the issue locally.

The fun part with runTests.sh is that it creates a full network with multiple services to run tests - for instance databases for functional tests and a selenium chrome browser for acceptance testing. The script is designed to run one suite at a time.

When using this on a CI machine, multiple such scripts with different core versions and tests have to be executed simultaneously: They must not interfere with each other.

docker-in-docker (dind)

So runTests.sh creates containers to run tests. In CI, however, this script already runs inside a container, so that it neither relies on the host system nor collides with other jobs running on the same machine.

There are two basic solutions when a “creating container” needs to run “sub” containers:

- docker-out-of-docker (DooD): Start additional containers on the host by giving the creating container access to the host’s docker daemon socket. “docker-out-of-docker” is the name sysbox uses for this. In effect, sub containers run in parallel to the creating container, in the same docker daemon.

The bamboo based CI followed this approach: A dockerized bamboo agent starts parallel test containers on the host. Besides hard-to-solve security issues, this approach causes quite some headaches with volume mounts: Paths mounted into sub containers are not resolved relative to the creating container, but relative to the file system of the host. Networks have similar issues: Their names need to be prefixed properly so a suite run does not destroy networks of other suites running in parallel. All of this leads to trouble and is ugly. And it requires dedicated CI scripts, the opposite of what we wanted to achieve with runTests.sh.

When the bamboo setup was created a couple of years ago, this was the only viable option, though. A famous post outlined why the alternative wasn’t better. This has changed in the meantime.

- docker-in-docker (DinD): The idea is simple: Start a container that runs a docker daemon. Then let the container that executes runTests.sh run its docker commands against this daemon, which starts any service containers needed for the test execution. Both containers can run in their own network, fully separated from other containers on the host. The source code under test is volume-mounted into both. The GitLab based CI follows this approach.
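The volume-mount headache of the DooD approach can be illustrated with a small sketch. Since sub containers are started by the host’s daemon, a path seen inside the creating container has to be translated into the matching host path before it can be bind-mounted. The mount table, the paths and the helper name `to_host_path` are made up for illustration and are not taken from the bamboo setup.

```python
from pathlib import PurePosixPath

# Hypothetical bind mounts of the creating container: container path -> host path.
MOUNTS = {
    PurePosixPath("/workspace"): PurePosixPath("/srv/ci/agent-1/workspace"),
}


def to_host_path(container_path, mounts=MOUNTS):
    """Translate a path inside the creating container into the host path
    that the shared docker daemon needs for a bind mount."""
    path = PurePosixPath(container_path)
    # Check the deepest mount points first so nested mounts win.
    for mount_point in sorted(mounts, key=lambda p: len(p.parts), reverse=True):
        if path == mount_point or mount_point in path.parents:
            return str(mounts[mount_point] / path.relative_to(mount_point))
    raise ValueError(f"{container_path} is not backed by a host bind mount")
```

With DinD this translation is not needed, because the test container and the daemon container mount the same source directory.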

sysbox

DinD has a big caveat: To run properly, the container running the docker daemon must be a ‘privileged’ container. This effectively gives that container host root privileges. With these access rights, an attacker can easily break out of the container and become root on the host machine.

Luckily, sysbox by nestybox comes to the rescue: This project provides an alternative OCI runtime for docker. The runtime is the part of docker that does the actual heavy lifting of creating and namespacing containers. The sysbox runtime is designed to make proper use of kernel user namespaces: The container process is started as an unprivileged user on the host, say “lolli”. Inside the container the process runs as root, but that root is not identical to the host’s root; it is just a “sub user” of “lolli”. This allows a non-privileged DinD container, solving the main security issue.
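How a user namespace maps container root to an unprivileged host user can be sketched in a few lines. The kernel exposes the mapping in `/proc/<pid>/uid_map`, one entry per line in the form `inside-id outside-id length`. The sample mapping below is an invented value, not read from a sysbox container, and the helper names are hypothetical.

```python
def parse_id_map(text):
    """Parse lines in the /proc/<pid>/uid_map format: inside-id outside-id length."""
    entries = []
    for line in text.strip().splitlines():
        inside, outside, length = (int(field) for field in line.split())
        entries.append((inside, outside, length))
    return entries


def host_uid(container_uid, id_map):
    """Return the host uid that a uid inside the user namespace maps to."""
    for inside, outside, length in id_map:
        if inside <= container_uid < inside + length:
            return outside + (container_uid - inside)
    raise LookupError(f"uid {container_uid} is not mapped in this namespace")


# Invented example: container uids 0..65535 map to host uids 165536..231071.
SAMPLE_MAP = parse_id_map("0 165536 65536")
```

With this sample mapping, `host_uid(0, SAMPLE_MAP)` returns 165536: root inside the container is an ordinary, unprivileged uid on the host.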

Note that this is just one sysbox feature: The runtime offers many more that docker itself does not implement properly. For instance, it enables docker to run containers that behave like full virtual machines (such as a full-fledged Ubuntu), but without the drawbacks of full virtualization like hard memory allocation. Have a look at the well explained sysbox blog series for more insights.

The setup can be found in the testing-infrastructure repository. A big shout out goes to the sysbox maintainers at nestybox for being exceptionally helpful with questions!

Runner layout

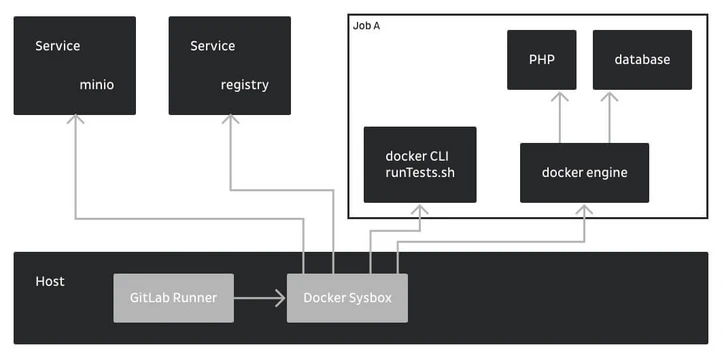

All that container nesting can be confusing at first. Let’s visualize the layout of a hardware runner.

- The GitLab runner runs on the host directly. It uses the ‘docker executor’, so every test job is started within a sysbox-enabled container. We use a docker base image that brings the docker CLI, slightly extended with docker-compose. As specified in gitlab-ci.yml, a docker-dind service is started as well. The runner makes sure the test container and the service are in the same network and configures the CLI container to use the DinD service as its docker endpoint.

- When runTests.sh is executed in the test container, the DinD service downloads, unpacks and starts the images needed for the test.

- When runTests.sh finishes, both containers are removed.

registry

So, for each and every job a new docker daemon is started. docker usually keeps images once they have been downloaded, so they can be re-used the next time they are needed. The DinD container, however, starts empty each time: It needs to download and unpack the requested images anew for each job, which of course consumes time. Since many images are official vendor images, we’d quickly run into docker hub rate limits.

To mitigate this, each hardware machine starts a caching image proxy. This is of course another container, based on the registry image. Both the host docker daemon and the DinD daemon are then configured to fetch images from this proxy. On hardware runners, this is a local network operation. With the cloud runner, the registry runs on the broker, so the job machines fetch images over the Hetzner cloud network.
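Pointing a docker daemon at such a proxy is a small configuration matter. The sketch below renders the two common forms: the `registry-mirrors` key of a `daemon.json` file and the `--registry-mirror` flag of `dockerd`. The mirror URL is a placeholder, and how the TYPO3 setup passes the setting is an assumption; the testing-infrastructure repository holds the real configuration.

```python
import json

# Placeholder address of the caching registry proxy (assumption).
MIRROR_URL = "http://registry.example.internal:5000"


def daemon_json(mirror_url):
    """Render a daemon.json that makes dockerd pull through the mirror first."""
    return json.dumps({"registry-mirrors": [mirror_url]}, indent=2)


def dockerd_args(mirror_url):
    """The same setting as dockerd command line arguments,
    for example for a DinD service container."""
    return ["--registry-mirror", mirror_url]


if __name__ == "__main__":
    print(daemon_json(MIRROR_URL))
    print(" ".join(dockerd_args(MIRROR_URL)))
```

The proxy itself is the registry image running in pull-through cache mode, which is enabled by setting an upstream registry in its `proxy.remoteurl` option.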

Note there is another option to reduce the unpacking cost: sysbox brings a neat little feature to preload the DinD image with inner images. Doing this for the most frequently used images would avoid the image download and unpack cost (usually a couple of seconds). We are not using this yet, but it’s a good future option. We did optimize the image sizes, though: The typical PHP image is less than 200MB in size, which is ok and rather quick to unpack.

minio

A second service we start on the machines is minio: It implements an S3-compatible object store, which GitLab uses for distributed caching. Nearly every job executes a “composer install”, and we really don’t want to download the packages each time. Similar things pop up with JavaScript modules and some other details. A few configuration options tell the GitLab runner about the available S3 cache on startup. When gitlab-ci.yml then configures caching, the runner stores the cached files there.

The setup on the cloud runner broker is similar again: The minio service is started on the broker and the job machines know the location of the S3 cache.

Summary

We hope this blog series gave a good overview of the new TYPO3 core CI testing infrastructure. Many details went unmentioned; the repositories give further insight.

At the time of writing, the infrastructure has already crunched about 140,000 jobs without major headaches.

The setup allows us to add or remove runners quickly, is fully transparent and does the exact same things a developer would do locally.

Due to the cloud runner, the setup scales automatically. This has already proven to be very useful with the latest security release: About 40 patches throughout various core versions had to be tested quickly, proving everything has been prepared correctly.

Testing and code development are symbiotic: Both require each other and both are changed together. The core CI journey will not end here, but the new infrastructure is a base we can rely on for a while.