The TYPO3 core CI uses the sysbox OCI runtime to run unprivileged Docker-in-Docker (DinD) containers, plus some helper containers to increase performance.

TYPO3 core testing infrastructure – Part 2

The new Core CI setup

This post is part of our TYPO3 core testing series.

System overview

In part 1 we explained why we reworked the test infrastructure once again. This part moves on to the technical details.

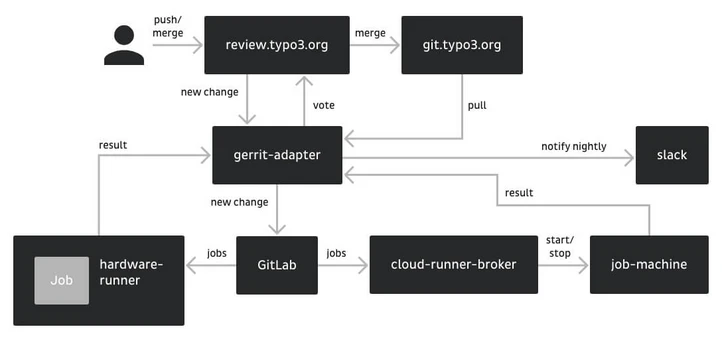

Let’s have a look at which systems interact with each other.

- Patches, reviews and merges for the TYPO3 core are handled by gerrit at review.typo3.org. Its efficient review interface meets our needs perfectly.

- GitLab CI works best if test runners pull the repository under test directly from GitLab. We thus established a small middleware that communicates between gerrit and GitLab.

- When a change is pushed to the review system, a gerrit hook notifies ‘gerrit-adapter’. GitLab hosts a ‘mirror’ repository of the core project; gerrit-adapter fetches the change from gerrit and pushes it as a new branch to this mirror. This triggers the GitLab test pipeline for the new branch.

- When a change is merged in the review system, review.typo3.org applies it to the official core repository on git.typo3.org (and the GitHub mirror is updated). It also notifies gerrit-adapter about the merge, which then updates the repository mirror on GitLab. This way GitLab always has an up-to-date version of the main branches like ‘9.5’, ‘10.4’ and ‘master’, which ensures the extended ‘nightly’ test suite always runs against the latest code. Nightly pipeline runs are scheduled tasks in GitLab for the branches maintained by the core team.

- A new branch in GitLab creates a test pipeline as defined in the .gitlab-ci.yml file. Available test runners then fetch individual jobs and run them.

- A final test job notifies gerrit-adapter again, which then adds a positive or negative testing vote for the change on review.typo3.org, or notifies slack about the result of a nightly run.
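The event flow above can be sketched as a tiny dispatcher. This is not the actual gerrit-adapter code. The event names mirror gerrit's `patchset-created` and `change-merged` hooks, and `refs/changes/NN/CHANGE/PATCHSET` is gerrit's standard ref layout for patch sets; the remote names `origin` and `gitlab`, the branch scheme `change-<number>-<patchset>` and the vote mapping are assumptions for illustration. The functions only compute git commands instead of running them.

```python
from dataclasses import dataclass


@dataclass
class GerritEvent:
    kind: str      # "patchset-created" or "change-merged"
    change: int    # gerrit change number
    patchset: int  # patch set number (unused for merges)
    branch: str    # target branch, e.g. "master" or "10.4"


def change_ref(change: int, patchset: int) -> str:
    """gerrit stores patch sets under refs/changes/<last two digits>/<change>/<patchset>."""
    return f"refs/changes/{change % 100:02d}/{change}/{patchset}"


def mirror_commands(event: GerritEvent, gerrit: str = "origin",
                    mirror: str = "gitlab") -> list[list[str]]:
    """Return the git commands a hypothetical adapter would run for an event."""
    if event.kind == "patchset-created":
        # Hypothetical branch name; pushing a new branch triggers a GitLab pipeline.
        target = f"change-{event.change}-{event.patchset}"
        return [
            ["git", "fetch", gerrit, change_ref(event.change, event.patchset)],
            ["git", "push", mirror, f"FETCH_HEAD:refs/heads/{target}"],
        ]
    if event.kind == "change-merged":
        # Keep the mirror's main branches current for the nightly runs.
        return [
            ["git", "fetch", gerrit, event.branch],
            ["git", "push", mirror, f"FETCH_HEAD:refs/heads/{event.branch}"],
        ]
    raise ValueError(f"unhandled event kind: {event.kind}")


def review_label(pipeline_status: str) -> dict[str, int]:
    """Map a GitLab pipeline status to a (assumed) gerrit Verified vote."""
    return {"Verified": 1 if pipeline_status == "success" else -1}
```

Returning command lists rather than shelling out keeps the decision logic testable; a real adapter would hand each list to `subprocess.run(..., check=True)`.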

Rough test job overview

Each change pushed to the review system runs through an extensive list of tests. At the time of writing, we’re dealing with roughly these scenarios:

- More than ten thousand unit tests with different PHP versions.

- About four thousand functional tests, multiplied by three to five to test different DBMS like mariadb, postgres and others.

- A couple of hundred acceptance tests, again with different underlying database systems.

- A set of various integrity tests like CGL compliance, phpstan, documentation details and others.
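To see how these permutations multiply, here is a small sketch that expands suites into one job per PHP version and DBMS. The PHP versions and DBMS names below are made-up placeholders, not the actual TYPO3 core matrix.

```python
from itertools import product
from typing import Optional


def expand_jobs(suite: str, php_versions: list[str],
                dbms: Optional[list[str]] = None) -> list[str]:
    """Expand one test suite into one job per PHP version and DBMS combination."""
    # Suites that need no database get a single placeholder entry.
    databases = dbms if dbms else [None]
    jobs = []
    for php, db in product(php_versions, databases):
        name = f"{suite} php{php}"
        if db is not None:
            name += f" {db}"
        jobs.append(name)
    return jobs


# Placeholder versions and DBMS names, for illustration only.
matrix = (
    expand_jobs("unit", ["8.1", "8.2", "8.3"])
    + expand_jobs("functional", ["8.1", "8.3"], ["mariadb", "mysql", "postgres", "sqlite"])
    + expand_jobs("acceptance", ["8.2"], ["mariadb", "postgres"])
)
print(len(matrix))  # 13 (3 unit + 8 functional + 2 acceptance)
```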

Some of these test suites (especially functional and acceptance) take hours if executed in one go. Since we want a full-suite result within a couple of minutes, we split those tests into multiple jobs. This currently adds up to roughly sixty jobs per change. The number differs between core branches and changes frequently, for instance when a TYPO3 version starts to support additional PHP versions. The nightlies extend this even further by testing a series of less common permutations.
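One common way to split a long suite into a fixed number of jobs is the classic longest-processing-time-first heuristic: sort test files by estimated cost and always hand the next file to the currently lightest chunk. The sketch assumes the number of test cases per file is a usable cost estimate; it is not necessarily how the TYPO3 core splits its suites.

```python
import heapq


def split_into_chunks(costs: dict[str, int], chunks: int) -> list[list[str]]:
    """Distribute test files over `chunks` jobs so the total cost per job stays similar.

    `costs` maps a test file to an estimated cost, e.g. its number of test cases.
    """
    if chunks < 1:
        raise ValueError("chunks must be at least 1")
    # Min-heap of (current total cost, chunk index): the lightest chunk is on top.
    heap = [(0, i) for i in range(chunks)]
    result: list[list[str]] = [[] for _ in range(chunks)]
    # Largest files first; ties are broken by name to keep the output deterministic.
    for name, cost in sorted(costs.items(), key=lambda item: (-item[1], item[0])):
        total, index = heapq.heappop(heap)
        result[index].append(name)
        heapq.heappush(heap, (total + cost, index))
    return result


files = {"A": 40, "B": 30, "C": 20, "D": 10, "E": 10}  # made-up costs
print(split_into_chunks(files, 2))  # [['A', 'D', 'E'], ['B', 'C']]
```

Placing the expensive files first matters: the small ones are left over at the end to even out the totals (60 vs. 50 here).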

A single job typically occupies at least one CPU, so we really need some hardware to deal with this if we always want reasonably quick test results.

Hardware

The testing infrastructure boils down to these systems:

- gerrit-adapter: This does not need a lot of computational power. It’s a hook endpoint that runs operations like ‘git pull’ and similar, which is boring from a performance point of view. One of the tiniest hetzner cloud instances is more than enough.

- GitLab instance: GitLab is relatively hardware-hungry. A small instance should not start below 4GB of RAM and a couple of dedicated CPUs. Many thanks to the TYPO3 Server Team for taking care of this by hosting gitlab.typo3.org.

- Dedicated test runner hardware: We decided to have some ‘always available’ hardware to churn through the bulk of test jobs. At the time of writing, this is one of the big hetzner AX161 machines with an AMD EPYC Rome 7502P (32 CPU cores) and 512GB RAM. It is configured to run 48 jobs in parallel and thus picks up more than ⅔ of the jobs whenever a change is pushed to the review system. We chose this system because we didn’t know how much RAM is actually needed. With enough data available now, we will most likely switch to two or three stock AX101 machines with an AMD Ryzen 9 5950X in the near future for more bang for the buck.

- Cloud scaling test runners: The dedicated testing hardware is overloaded as soon as multiple changes are pushed to the review system within a short timeframe. However, it is not cost-effective to rent hardware that can handle every possible spike. Nor is it acceptable to have bored developers waiting for test-suite results. For this case, GitLab provides an auto-scaling runner option: a dedicated runner starts cloud machines when jobs are left in the queue and stops them when they have been idle for too long. The hetzner cloud is the perfect platform for this.
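A back-of-the-envelope model shows why the cloud runners are needed. It assumes one job per slot, the 48 parallel slots and roughly sixty jobs per change mentioned above, and a hypothetical four jobs per cloud machine and ten-minute idle timeout. GitLab's real autoscaler has its own settings and logic; this is only the arithmetic, not its implementation.

```python
import math

JOBS_PER_CHANGE = 60      # rough figure from the text
DEDICATED_SLOTS = 48      # parallel jobs on the dedicated runner
JOBS_PER_CLOUD_VM = 4     # hypothetical: parallel jobs per cloud machine
IDLE_LIMIT_SECONDS = 600  # hypothetical idle timeout before a machine is stopped


def cloud_machines_needed(pushed_changes: int) -> int:
    """Cloud machines needed so no job waits, if all changes arrive at once."""
    queued = pushed_changes * JOBS_PER_CHANGE
    overflow = max(0, queued - DEDICATED_SLOTS)
    return math.ceil(overflow / JOBS_PER_CLOUD_VM)


def should_stop(idle_seconds: float) -> bool:
    """Scale down a cloud machine once it has been idle for too long."""
    return idle_seconds > IDLE_LIMIT_SECONDS


for changes in (1, 2, 5):
    print(changes, cloud_machines_needed(changes))
# 1 3
# 2 18
# 5 63
```

Even a single change overflows the dedicated runner by twelve jobs in this model, and a burst of pushes quickly needs dozens of short-lived machines, which is exactly the case per-use cloud billing suits.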

Note

This view is biased: Christian Kuhn, the author of this blog series, has been using hetzner.de services for about fifteen years and has always found this provider reliable. There are alternatives.

Hetzner cloud

hetzner.de is known for its root servers with a good price/performance ratio. They are a good option as a hardware provider if you know what you are doing on the server level.

With the ‘go-cloud’ hype train, hetzner added its own solution a while ago: the hetzner cloud provides a web interface to spin up differently sized server instances on the fly and pay for them on a minute basis. An API allows doing the same from code. This is similar to what AWS, Google Cloud and others offer at this level.
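For illustration, a minimal sketch of the API side: building the request that would create one server. The endpoint, bearer-token header and the `name`, `server_type`, `image`, `labels` and `user_data` fields follow the public Hetzner Cloud API reference as we understand it; the token, server type, image name and label are placeholders, so check the current reference before relying on any of them.

```python
import json
import urllib.request

API_URL = "https://api.hetzner.cloud/v1/servers"


def build_create_server_request(token: str, name: str, server_type: str, image: str,
                                user_data: str = "") -> urllib.request.Request:
    """Build (but do not send) a request asking the Hetzner Cloud API for a new server."""
    body = {
        "name": name,
        "server_type": server_type,
        "image": image,
        "labels": {"role": "ci-runner"},  # hypothetical label for later lookups
    }
    if user_data:
        body["user_data"] = user_data  # e.g. a cloud-init document
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder token, server type and image.
req = build_create_server_request("YOUR_API_TOKEN", "ci-runner-1", "cx22", "ubuntu-22.04")
print(req.get_method(), req.full_url)  # POST https://api.hetzner.cloud/v1/servers
# urllib.request.urlopen(req) would actually create (and bill) the server.
```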

In the end, hetzner cloud is a great solution to host stuff like those auto-scaling test runners or small instances like the gerrit-adapter - especially when further dedicated test hardware is also hetzner based.

DevOps tooling

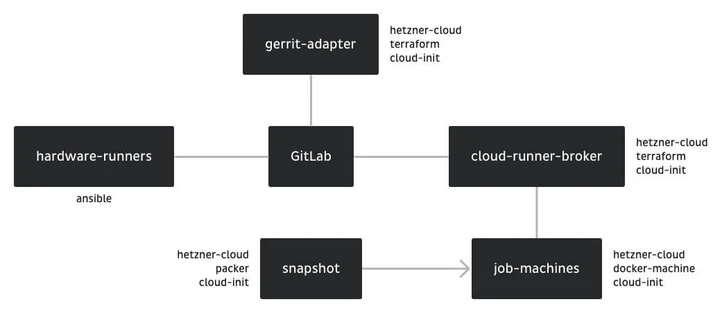

The GitLab instance is maintained by the server team, so we won’t take a closer look at its setup and maintenance. The runner setup, however, is managed by the core team. Let’s have a look at the DevOps tools we use to deploy the infrastructure:

- ansible: As one of the “configure my machine” tools, ansible is used to set up the hardware runners. When a machine is in the hetzner “rescue system”, a first role installs the base system and reboots, a second role updates packages and reboots again, and a third role then installs the gitlab-runner and some additional docker services (more about that later) and registers the runner in GitLab. This allows quick initialization of new or failed hardware runners.

- terraform: This is an “Infrastructure as code” tool by HashiCorp, the company probably best known for vagrant. terraform targets those “everything is cloud” applications: a full cloud setup is specified with it. Hetzner provides a terraform plugin to use its cloud API. The main difference to a tool like ansible is that terraform has state: it knows the current cloud layout and can adapt it to match the specification. terraform is used to deploy the gerrit-adapter and the cloud-runner-broker to hetzner cloud.

- packer: Create cloud machine snapshots. The cloud job-machines started by the broker use a prepared snapshot, managed by packer. terraform finds the latest one and tells cloud-runner-broker which one to use.

- cloud-init: Customize cloud instances. cloud-init is installed by default on hetzner cloud machine images. It’s basically a YAML file that allows creating configuration files and executing scripts during boot. Most of the magic is in these files: terraform substitutes variables in them and feeds them to the machines.

- docker: Of course. All testing is done within docker, in fact within a nested docker-in-docker setup, nicely isolated with sysbox. docker also runs the two helper services minio and registry. We provide a set of test-related custom images, mostly PHP images that include the needed modules.
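The packer, terraform and cloud-init interplay boils down to two small steps: pick the newest job snapshot, then render a cloud-init document with machine-specific values. The real setup does this in terraform; the Python sketch below only mirrors the idea. The image dictionaries mimic the assumed shape of Hetzner Cloud image objects (`type`, `created` as ISO 8601 with offset, `labels`), while the `purpose` label, the template variables, the file path and the `runcmd` step are hypothetical. `write_files` and `runcmd` are regular cloud-init keys.

```python
from datetime import datetime
from string import Template


def latest_snapshot(images: list[dict], purpose: str) -> dict:
    """Return the most recently created snapshot carrying the given (hypothetical) label."""
    candidates = [
        img for img in images
        if img["type"] == "snapshot" and img["labels"].get("purpose") == purpose
    ]
    if not candidates:
        raise LookupError(f"no snapshot labelled purpose={purpose}")
    return max(candidates, key=lambda img: datetime.fromisoformat(img["created"]))


# A cloud-init document; the $-placeholders are filled in per machine.
CLOUD_INIT = Template("""#cloud-config
write_files:
  - path: /etc/ci/runner.env
    content: |
      RUNNER_NAME=$runner_name
      REGISTRY_URL=$registry_url
runcmd:
  - systemctl restart docker
""")


def render_user_data(runner_name: str, registry_url: str) -> str:
    """Substitute machine-specific values into the template."""
    return CLOUD_INIT.substitute(runner_name=runner_name, registry_url=registry_url)
```

`Template.substitute` raises a `KeyError` for any placeholder left unfilled, so a forgotten variable fails loudly instead of producing a half-configured machine.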

Summary

This part gave a rough overview of the involved systems, how they are managed and which tools are used.

Special thanks go to Stefan Wienert and to TYPO3 community member Marc Willmann for their blog posts.

The next part dives deeper into the rabbit hole by looking at the docker setup used for testing.